Passing the Microsoft DP-420 exam can advance your IT career. Microsoft is one of the best-known certification bodies in the world, however, and the DP-420 exam is not easy. Many test-takers attempt it and still fail. Why? Often because they did not prepare with a proper set of DP-420 dumps questions.

Use the Microsoft DP-420 dumps questions at https://www.pass4itsure.com/dp-420.html to prepare for the Microsoft DP-420 exam.

PS: Microsoft-technet.com shares the latest DP-420 exam questions 1-12 to help you study. You can take the free practice test first, then download the complete DP-420 dumps questions.

Take the free DP-420 exam test questions 1-12 to try out the dumps

q1.

You need to configure an Apache Kafka instance to ingest data from an Azure Cosmos DB Core (SQL) API account.

The data from a container named telemetry must be added to a Kafka topic named iot. The solution must store the data in a compact binary format.

Which three configuration items should you include in the solution? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector"

B. "key.converter": "org.apache.kafka.connect.json.JsonConverter"

C. "key.converter": "io.confluent.connect.avro.AvroConverter"

D. "connect.cosmos.containers.topicmap": "iot#telemetry"

E. "connect.cosmos.containers.topicmap": "iot"

F. "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSinkConnector"

Correct Answer: ACD

A: The data flows from Azure Cosmos DB into Kafka, so the source connector is required. Kafka Connect for Azure Cosmos DB is a connector to read from and write data to Azure Cosmos DB. The Azure Cosmos DB sink connector works in the opposite direction: it exports data from Apache Kafka topics to an Azure Cosmos DB database, polling data from Kafka to write to containers in the database based on the topics subscription.

C: Avro is a binary format, while JSON is text.

D: The topic map uses the format topic#container, so "iot#telemetry" maps the iot topic to the telemetry container. The sink connector documentation shows the same setting in the JSON body that defines the connector config.

Extract:

"connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",
"key.converter": "io.confluent.connect.avro.AvroConverter",
"connect.cosmos.containers.topicmap": "hotels#kafka"

Incorrect Answers:

B: JSON is plain text.

Note, full example:

{
  "name": "cosmosdb-sink-connector",
  "config": {
    "connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",
    "tasks.max": "1",
    "topics": ["hotels"],
    "value.converter": "io.confluent.connect.avro.AvroConverter",
    "value.converter.schemas.enable": "false",
    "key.converter": "io.confluent.connect.avro.AvroConverter",
    "key.converter.schemas.enable": "false",
    "connect.cosmos.connection.endpoint": "https://.documents.azure.com:443/",
    "connect.cosmos.master.key": "",
    "connect.cosmos.databasename": "kafkaconnect",
    "connect.cosmos.containers.topicmap": "hotels#kafka"
  }
}
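The two settings that carry the requirements in this question (a binary key converter and a topic-to-container mapping) can be sketched in a few lines of Python. This is only an illustration: the helper names `topic_map` and `parse_topic_map` are hypothetical, and the fragment deliberately leaves out the connector class, endpoint, and key.

```python
import json

# Converter class taken from option C; Avro is a compact binary format.
AVRO_CONVERTER = "io.confluent.connect.avro.AvroConverter"


def topic_map(pairs):
    """Build a topic map value from (topic, container) pairs.

    Each entry has the form topic#container; entries are comma-separated.
    """
    return ",".join(f"{topic}#{container}" for topic, container in pairs)


def parse_topic_map(value):
    """Split a topic map string back into a {topic: container} dict."""
    return dict(entry.split("#", 1) for entry in value.split(",") if entry)


# Hypothetical fragment: only the settings discussed in the question.
config_fragment = {
    "key.converter": AVRO_CONVERTER,
    "connect.cosmos.containers.topicmap": topic_map([("iot", "telemetry")]),
}

print(json.dumps(config_fragment, indent=2))
```

Parsing the value back with `parse_topic_map` is a quick way to check that each topic points at the container you expect before submitting the config to Kafka Connect.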

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/kafka-connector-sink

https://www.confluent.io/blog/kafka-connect-deep-dive-converters-serialization-explained/

q2.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure Synapse pipeline that uses Azure Cosmos DB Core (SQL) API as the input and Azure Blob Storage as the output.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Instead, create an Azure function that uses the Azure Cosmos DB Core (SQL) API change feed as a trigger and an Azure event hub as the output.

The Azure Cosmos DB change feed is a mechanism to get a continuous and incremental feed of records from an Azure Cosmos container as those records are being created or modified. Change feed support works by listening to the container for any changes. It then outputs the sorted list of documents that were changed, in the order in which they were modified.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

q3.

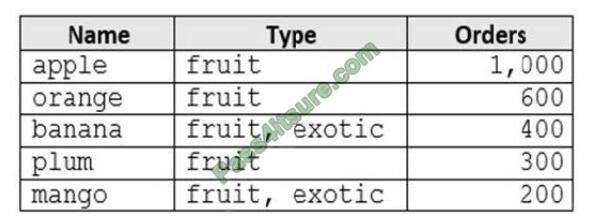

You are developing an application that will use an Azure Cosmos DB Core (SQL) API account as a data source. You need to create a report that displays the top five most ordered fruits as shown in the following table.

A collection that contains aggregated data already exists. The following is a sample document:

{
  "name": "apple",
  "type": ["fruit", "exotic"],
  "orders": 10000
}

Which two queries can you use to retrieve data for the report? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Option A

B. Option B

C. Option C

D. Option D

Correct Answer: BD

ARRAY_CONTAINS returns a Boolean indicating whether the array contains the specified value. You can check for a partial or full match of an object by using a boolean expression within the command.

Incorrect Answers:

A: The default sort order is ascending. The report requires descending order.

C: The query must order by orders, not by type.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-array-contains
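The answer options for this question are only available as images, so the query below is a hypothetical one that is merely consistent with the explanation: filter with ARRAY_CONTAINS, sort by orders in descending order, and keep the top five. The Python function mirrors the same logic on an in-memory list so the behaviour can be checked without an account; the sample documents other than "apple" are made up.

```python
# Hypothetical query text; not one of the exam's (image-only) options.
QUERY = (
    "SELECT TOP 5 c.name, c.orders FROM c "
    'WHERE ARRAY_CONTAINS(c.type, "fruit") '
    "ORDER BY c.orders DESC"
)


def top_ordered(items, tag="fruit", limit=5):
    """Mimic the query: keep items tagged `tag`, sort by orders descending."""
    matching = [item for item in items if tag in item.get("type", [])]
    matching.sort(key=lambda item: item["orders"], reverse=True)
    return [{"name": i["name"], "orders": i["orders"]} for i in matching[:limit]]


# Made-up sample data in the shape of the document shown above.
sample = [
    {"name": "apple", "type": ["fruit", "exotic"], "orders": 10000},
    {"name": "carrot", "type": ["vegetable"], "orders": 50000},
    {"name": "banana", "type": ["fruit"], "orders": 12000},
    {"name": "kiwi", "type": ["fruit", "exotic"], "orders": 300},
]

for row in top_ordered(sample):
    print(row["name"], row["orders"])
```

Sorting in ascending order, or sorting on type instead of orders, would return the wrong rows, which is why options A and C are rejected.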

q4.

You have an Azure Cosmos DB Core (SQL) API account that uses a custom conflict resolution policy. The account has a registered merge procedure that throws a runtime exception.

The runtime exception prevents conflicts from being resolved.

You need to use an Azure function to resolve the conflicts.

What should you use?

A. a function that pulls items from the conflicts feed and is triggered by a timer trigger

B. a function that receives items pushed from the change feed and is triggered by an Azure Cosmos DB trigger

C. a function that pulls items from the change feed and is triggered by a timer trigger

D. a function that receives items pushed from the conflicts feed and is triggered by an Azure Cosmos DB trigger

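Correct Answer: A

The Azure Cosmos DB Trigger uses the Azure Cosmos DB Change Feed to listen for inserts and updates across partitions. The change feed publishes inserts and updates, not deletions. The trigger does not listen to the conflicts feed, so the function must run on a timer and pull the unresolved conflicts from the conflicts feed itself.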

Reference: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb

q5.

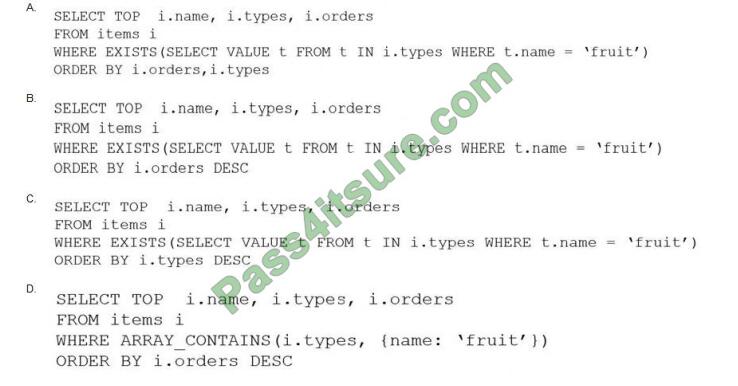

HOTSPOT

You provision Azure resources by using the following Azure Resource Manager (ARM) template.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: No

An alert is triggered when the DB key is regenerated, not when it is used.

Note: The az cosmosdb keys regenerate command regenerates an access key for an Azure Cosmos DB database account.

Box 2: No

Only an SMS action will be taken.

Emailreceivers is empty so no email action is taken.

Box 3: Yes

Yes, an alert is triggered when the DB key is regenerated.

Reference:

https://docs.microsoft.com/en-us/cli/azure/cosmosdb/keys

q6.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to provide a user named User1 with the ability to insert items into container1 by using role-based access control (RBAC). The solution must use the principle of least privilege.

Which roles should you assign to User1?

A. CosmosDB Operator only

B. DocumentDB Account Contributor and Cosmos DB Built-in Data Contributor

C. DocumentDB Account Contributor only

D. Cosmos DB Built-in Data Contributor only

Correct Answer: D

Cosmos DB Built-in Data Contributor is a data-plane role that allows reading and writing items in a container, which is all User1 needs to insert items into container1.

Incorrect Answers:

A: Cosmos DB Operator can provision Azure Cosmos accounts, databases, and containers. It cannot access any data or use Data Explorer.

B: DocumentDB Account Contributor can manage Azure Cosmos DB accounts. Azure Cosmos DB was formerly known as DocumentDB.

C: DocumentDB Account Contributor can manage Azure Cosmos DB accounts.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/role-based-access-control

q7.

You are implementing an Azure Data Factory data flow that will use an Azure Cosmos DB (SQL API) sink to write a dataset. The data flow will use 2,000 Apache Spark partitions. You need to ensure that the ingestion from each Spark partition is balanced to optimize throughput.

Which sink setting should you configure?

A. Throughput

B. Write throughput budget

C. Batch size

D. Collection action

Correct Answer: C

Batch size: An integer that represents how many objects are written to the Cosmos DB collection in each batch. Usually, starting with the default batch size is sufficient. To further tune this value, note:

Cosmos DB limits the size of a single request to 2 MB. The formula is "Request Size = Single Document Size * Batch Size". If you hit an error saying "Request size is too large", reduce the batch size value. The larger the batch size, the better the throughput the service can achieve, provided you allocate enough RUs for your workload.
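As a rough illustration of the request-size formula, the sketch below computes the largest batch size that stays within the limit for a given document size. It assumes the 2 MB limit means 2 * 1024 * 1024 bytes and that every document in a batch has the same size; both are simplifications, and the function names are hypothetical.

```python
# Assumption: "2 MB" is read as 2 MiB.
MAX_REQUEST_BYTES = 2 * 1024 * 1024


def request_size(document_bytes, batch_size):
    """Request Size = Single Document Size * Batch Size."""
    return document_bytes * batch_size


def max_batch_size(document_bytes, limit=MAX_REQUEST_BYTES):
    """Largest batch size whose request size does not exceed the limit."""
    if document_bytes <= 0:
        raise ValueError("document_bytes must be positive")
    return limit // document_bytes


doc_bytes = 4096  # hypothetical 4 KiB document
batch = max_batch_size(doc_bytes)
print(batch, request_size(doc_bytes, batch))
```

With 4 KiB documents the sketch yields a batch size of 512. One more document per batch would push the request over the limit and trigger the "Request size is too large" error.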

Incorrect Answers:

A: Throughput: Set an optional value for the number of RUs you'd like to apply to your Cosmos DB collection for each execution of this data flow. Minimum is 400.

B: Write throughput budget: An integer that represents the RUs you want to allocate for this Data Flow write operation, out of the total throughput allocated to the collection.

D: Collection action: Determines whether to recreate the destination collection prior to writing. None: No action will be done to the collection. Recreate: The collection will get dropped and recreated.

Reference: https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db

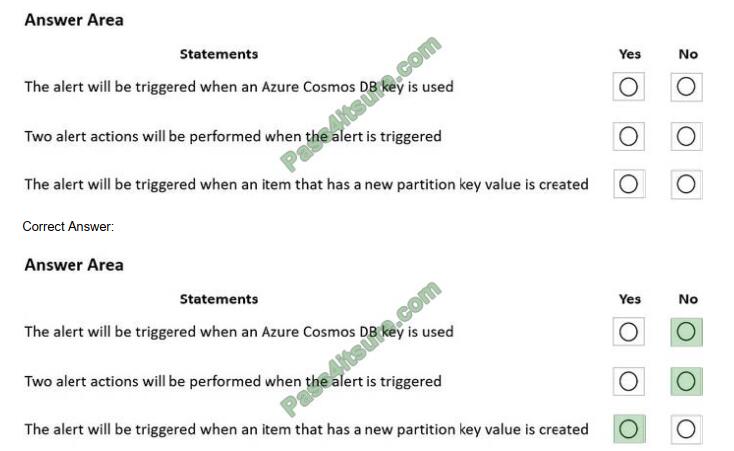

q8.

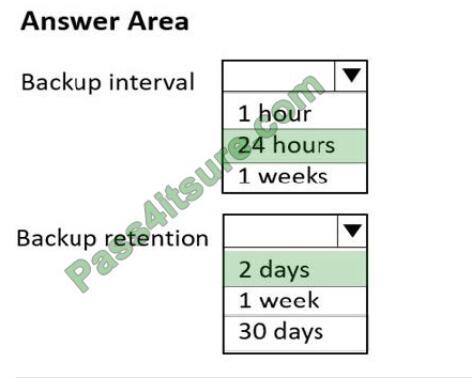

HOTSPOT

You have a database in an Azure Cosmos DB Core (SQL) API account that is used for development.

The database is modified once per day in a batch process.

You need to ensure that you can restore the database if the last batch process fails. The solution must minimize costs.

How should you configure the backup settings? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

q9.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account. Upserts of items in container1 occur every three seconds.

You have an Azure Functions app named function1 that is supposed to run whenever items are inserted or replaced in container1.

You discover that function1 runs, but not on every upsert.

You need to ensure that function1 processes each upsert within one second of the upsert.

Which property should you change in the function.json file of function1?

A. checkpointInterval

B. leaseCollectionsThroughput

C. maxItemsPerInvocation

D. feedPollDelay

Correct Answer: D

With an upsert operation we can either insert or update an existing record at the same time.

FeedPollDelay: The time (in milliseconds) for the delay between polling a partition for new changes on the feed, after all current changes are drained. Default is 5,000 milliseconds, or 5 seconds.
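A trigger binding with a lowered poll delay might look like the sketch below, which builds the function.json content as a Python dictionary and prints it as JSON. The property names follow the Cosmos DB trigger for the 2.x extension referenced for this question; the binding name, connection setting name, database name, lease collection name, and the 500 ms value are all hypothetical choices for illustration.

```python
import json

# Hypothetical names; only container1 comes from the question.
binding = {
    "type": "cosmosDBTrigger",
    "name": "documents",
    "direction": "in",
    "connectionStringSetting": "CosmosDBConnection",
    "databaseName": "db1",
    "collectionName": "container1",
    "leaseCollectionName": "leases",
    "createLeaseCollectionIfNotExists": True,
    # Poll well under one second instead of the 5,000 ms default.
    "feedPollDelay": 500,
}

function_json = {"bindings": [binding]}

print(json.dumps(function_json, indent=2))
```

Any value comfortably below 1,000 ms would address the one-second requirement. Shorter delays mean more frequent polling of the change feed, so the value is a trade-off rather than a fixed recommendation.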

Incorrect Answers:

A: checkpointInterval: When set, it defines, in milliseconds, the interval between lease checkpoints. Default is always after each Function call.

C: maxItemsPerInvocation: When set, this property sets the maximum number of items received per Function call. If operations in the monitored collection are performed through stored procedures, transaction scope is preserved when reading items from the change feed. As a result, the number of items received could be higher than the specified value so that the items changed by the same transaction are returned as part of one atomic batch.

Reference: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger

q10.

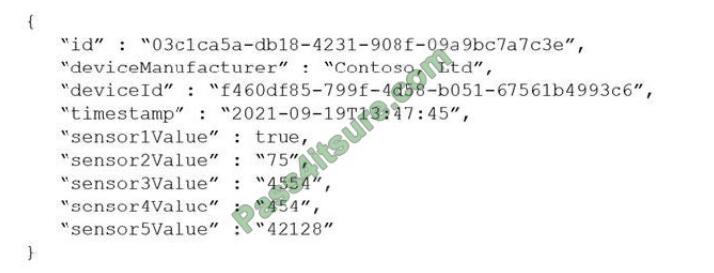

You are designing an Azure Cosmos DB Core (SQL) API solution to store data from IoT devices. Writes from the devices will occur every second. The following is a sample of the data.

The most common query lists the books for a given authorId.

You need to develop a non-relational data model for Azure Cosmos DB Core (SQL) API that will replace the relational database. The solution must minimize latency and read operation costs.

What should you include in the solution?

A. Create a container for Author and a container for Book. In each Author document, embed bookId for each book by the author. In each Book document, embed authorId of each author.

B. Create Author, Book, and Bookauthorlnk documents in the same container.

C. Create a container that contains a document for each Author and a document for each Book. In each Book document, embed authorId.

D. Create a container for Author and a container for Book. In each Author document and Book document, embed the data from Bookauthorlnk.

Correct Answer: A

Store multiple entity types in the same container.

q12.

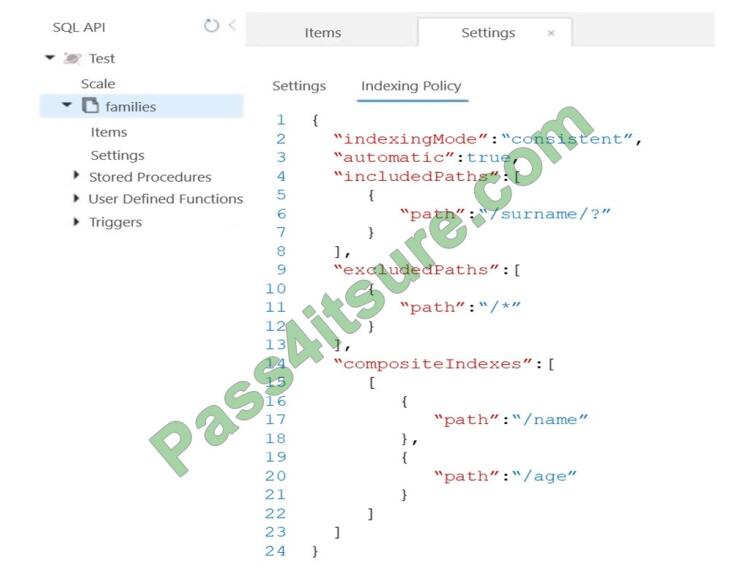

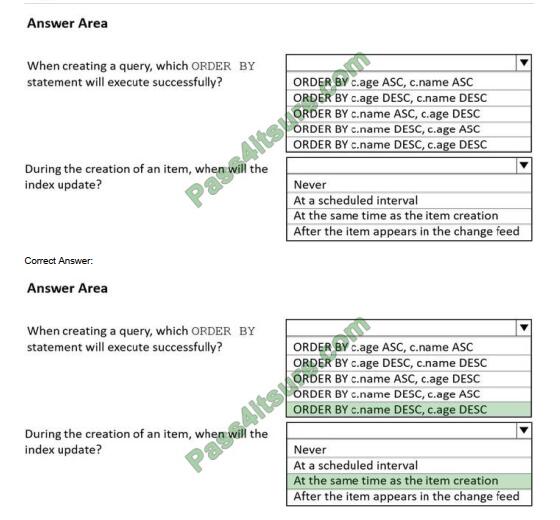

HOTSPOT

You have the indexing policy shown in the following exhibit.

Use the drop-down menus to select the answer choice that answers each question based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: ORDER BY c.name DESC, c.age DESC

Queries that have an ORDER BY clause with two or more properties require a composite index.

The following considerations are used when using composite indexes for queries with an ORDER BY clause with two or more properties:

1. If the composite index paths do not match the sequence of the properties in the ORDER BY clause, then the composite index can't support the query.

2. The order of composite index paths (ascending or descending) should also match the order in the ORDER BY clause.

3. The composite index also supports an ORDER BY clause with the opposite order on all paths.
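The three rules above can be sketched as a small check. The exhibit is not reproduced in the text, so the composite index below (name ascending, age ascending) is an assumption chosen to be consistent with the Box 1 answer, and `supports_order_by` is a hypothetical helper, not part of any SDK.

```python
# Assumed composite index; the real exhibit is only available as an image.
COMPOSITE_INDEX = [("/name", "ascending"), ("/age", "ascending")]


def flip(order):
    """Return the opposite sort order."""
    return "descending" if order == "ascending" else "ascending"


def supports_order_by(index, order_by):
    """Apply the rules: same path sequence, and orders either all match
    the index or are all the opposite of the index."""
    if [path for path, _ in index] != [path for path, _ in order_by]:
        return False  # rule 1: path sequence must match
    index_orders = [order for _, order in index]
    query_orders = [order for _, order in order_by]
    if query_orders == index_orders:
        return True  # rule 2: orders match
    return query_orders == [flip(o) for o in index_orders]  # rule 3


# ORDER BY c.name DESC, c.age DESC
print(supports_order_by(
    COMPOSITE_INDEX, [("/name", "descending"), ("/age", "descending")]
))
```

Under the assumed index, a mixed clause such as ORDER BY c.name ASC, c.age DESC fails the check, because the orders neither match the index nor reverse it on every path.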

Box 2: At the same time as the item creation

Azure Cosmos DB supports two indexing modes:

1. Consistent: The index is updated synchronously as you create, update or delete items. This means that the consistency of your read queries will be the consistency configured for the account.

2. None: Indexing is disabled on the container.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/index-policy

The DP-420 exam questions in PDF format are free to download

Click this link for free DP-420 dumps pdf https://drive.google.com/file/d/1FUqlIicU8sv_M3l4y0fnlys9hgp0EBVO/view?usp=sharing [latest google drive]

Before you take the DP-420 exam, prepare thoroughly and use the latest version of the DP-420 dumps questions to help you pass the actual Designing and Implementing Cloud-Native Applications Using Microsoft Azure Cosmos DB exam.

Pass4itSure offers the latest version of the DP-420 dumps questions for 2022 (51 exam questions) https://www.pass4itsure.com/dp-420.html (Updated: Mar 16, 2022) to help you pass the exam.