Pass4itSure Microsoft AI-102 exam dumps are designed with the help of Microsoft’s real exam content. You can get AI-102 VCE dumps and AI-102 PDF dumps from Pass4itSure! Check out the updated AI-102 exam questions in the Pass4itSure AI-102 dumps at https://www.pass4itsure.com/ai-102.html (Q&As: 54) (VCE and PDF). We are very confident that you will be successful on the Microsoft AI-102 exam.

Microsoft AI-102 exam questions in PDF file

Download the Pass4itSure AI-102 PDF from Google Drive: https://drive.google.com/file/d/1ILFQCEtoIVj3dR0HESB2BG0_gaDDM4m-/view?usp=sharing

The following are some Microsoft AI-102 exam questions for review (Microsoft AI-102 practice test, questions 1-13)

QUESTION 1

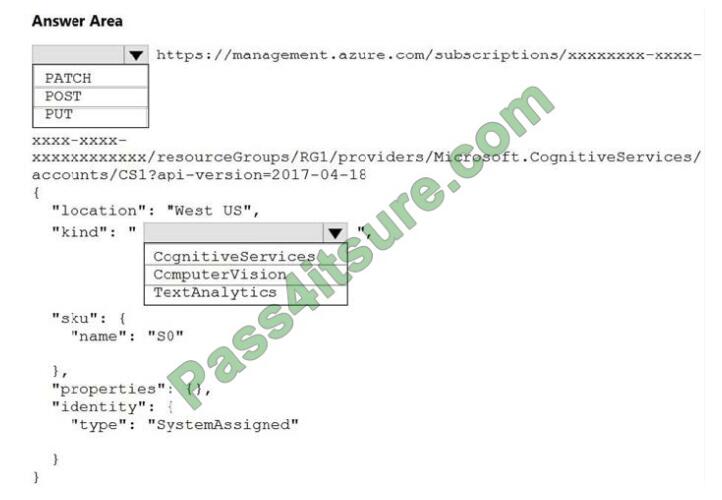

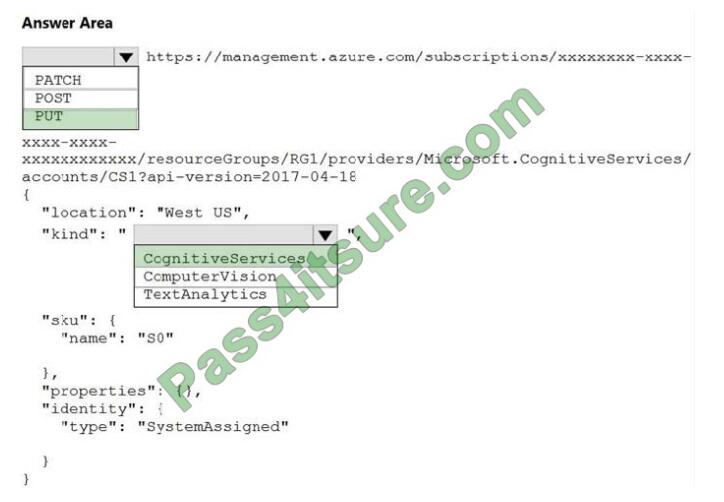

HOTSPOT

You need to create a new resource that will be used to perform sentiment analysis and optical character recognition (OCR). The solution must meet the following requirements:

Use a single key and endpoint to access multiple services.

Consolidate billing for future services that you might use.

Support the use of Computer Vision in the future.

How should you complete the HTTP request to create the new resource? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

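Box 1: PUT

Creating a resource through Azure Resource Manager uses PUT. The sample request below comes from the Device Update reference and illustrates only the verb; for this question the resource provider is Microsoft.CognitiveServices (see Box 2).

Sample Request: PUT https://management.azure.com/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/test-rg/providers/Microsoft.DeviceUpdate/accounts/contoso?api-version=2020-03-01-preview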

Incorrect Answers:

PATCH is for updates.

Box 2: CognitiveServices

Microsoft Azure Cognitive Services lets you use pre-trained models for various business problems related to machine learning.

The service categories are:

Decision

Language (includes sentiment analysis)

Speech

Vision (includes OCR)

Web Search
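The two answer boxes can be put together in a minimal Python sketch that only builds the request and does not send it. The account name, region, pricing tier, and API version are illustrative assumptions and are not taken from the question; the PUT verb, the Microsoft.CognitiveServices provider, and the CognitiveServices kind are the parts the answer depends on.

```python
import json

# Hypothetical placeholders: none of these values come from the question.
SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = "test-rg"
ACCOUNT_NAME = "contoso-ai"
API_VERSION = "2017-04-18"  # assumed API version; check the current reference


def build_create_request(kind: str = "CognitiveServices") -> dict:
    """Describe (without sending) the ARM request that creates the account."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{SUBSCRIPTION_ID}"
        f"/resourceGroups/{RESOURCE_GROUP}"
        "/providers/Microsoft.CognitiveServices"
        f"/accounts/{ACCOUNT_NAME}"
        f"?api-version={API_VERSION}"
    )
    body = {
        "location": "westus",   # assumed region
        "kind": kind,           # multi-service kind: one key and endpoint
        "sku": {"name": "S0"},  # assumed pricing tier
        "properties": {},
    }
    # PUT creates the resource; PATCH would only update an existing one.
    return {"method": "PUT", "url": url, "body": body}


request = build_create_request()
print(request["method"], request["url"])
print(json.dumps(request["body"], indent=2))
```

Sending this request would additionally need an Azure AD bearer token in the Authorization header, which is left out here.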

Reference:

https://docs.microsoft.com/en-us/rest/api/deviceupdate/resourcemanager/accounts/create

https://www.analyticsvidhya.com/blog/2020/12/microsoft-azure-cognitive-services-api-for-ai-development/

QUESTION 2

You develop an application to identify species of flowers by training a Custom Vision model.

You receive images of new flower species.

You need to add the new images to the classifier.

Solution: You add the new images, and then use the Smart Labeler tool.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

The model needs to be extended and retrained.

Note: Smart Labeler generates suggested tags for images. This lets you label a large number of images more quickly when training a Custom Vision model.

QUESTION 3

You build a language model by using a Language Understanding service. The language model is used to search for information on a contact list by using an intent named FindContact.

A conversational expert provides you with the following list of phrases to use for training.

Find contacts in London.

Who do I know in Seattle?

Search for contacts in Ukraine.

You need to implement the phrase list in Language Understanding.

Solution: You create a new pattern in the FindContact intent.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Instead use a new intent for location.

Note: An intent represents a task or action the user wants to perform. It is a purpose or goal expressed in a user’s utterance.

Define a set of intents that corresponds to actions users want to take in your application.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/luis/luis-concept-intent

QUESTION 4

You are developing the smart e-commerce project.

You need to implement autocompletion as part of the Cognitive Search solution.

Which three actions should you perform? Each correct answer presents part of the solution. (Choose three.)

NOTE: Each correct selection is worth one point.

A. Make API queries to the autocomplete endpoint and include suggesterName in the body.

B. Add a suggester that has the three product name fields as source fields.

C. Make API queries to the search endpoint and include the product name fields in the searchFields query parameter.

D. Add a suggester for each of the three product name fields.

E. Set the searchAnalyzer property for the three product name variants.

F. Set the analyzer property for the three product name variants.

Correct Answer: ABF

Scenario: Support autocompletion and autosuggestion based on all product name variants.

A: Call a suggester-enabled query, in the form of a Suggestion request or Autocomplete request, using an API. API usage is illustrated in the following call to the Autocomplete REST API.

POST /indexes/myxboxgames/docs/autocomplete?search&api-version=2020-06-30

{ "search": "minecraf", "suggesterName": "sg" }

B: In Azure Cognitive Search, typeahead or “search-as-you-type” is enabled through a suggester. A suggester provides a list of fields that undergo additional tokenization, generating prefix sequences to support matches on partial terms. For example, a suggester that includes a City field with a value for “Seattle” will have prefix combinations of “sea”, “seat”, “seatt”, and “seattl” to support typeahead.

F: Use the default standard Lucene analyzer ("analyzer": null) or a language analyzer (for example, "analyzer": "en.Microsoft") on the field.
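Answers A, B, and F fit together roughly as in the Python sketch below, which only builds the JSON payloads. The three field names are hypothetical stand-ins for the product name variants, and the suggester name "sg" is borrowed from the sample call above; the `suggesters` / `sourceFields` layout follows the index definition format described in the referenced article.

```python
import json

# Hypothetical field names standing in for the three product name variants.
PRODUCT_NAME_FIELDS = ["productName", "productNameShort", "productNameLocal"]


def build_index_fragment(analyzer=None) -> dict:
    """Index fragment: one suggester (B) over fields that set analyzer (F)."""
    fields = [
        {
            "name": name,
            "type": "Edm.String",
            "searchable": True,
            "analyzer": analyzer,  # None -> default standard Lucene analyzer
        }
        for name in PRODUCT_NAME_FIELDS
    ]
    suggesters = [
        {
            "name": "sg",
            "searchMode": "analyzingInfixMatching",
            "sourceFields": list(PRODUCT_NAME_FIELDS),
        }
    ]
    return {"fields": fields, "suggesters": suggesters}


def build_autocomplete_body(partial_term: str, suggester_name: str = "sg") -> dict:
    """Body for the autocomplete endpoint (A): it must name the suggester."""
    return {"search": partial_term, "suggesterName": suggester_name}


index_fragment = build_index_fragment(analyzer="en.microsoft")
autocomplete_body = build_autocomplete_body("minecraf")
print(json.dumps(index_fragment["suggesters"], indent=2))
print(json.dumps(autocomplete_body))
```

A single suggester lists all three fields, which is why option B is chosen over option D.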

Reference: https://docs.microsoft.com/en-us/azure/search/index-add-suggesters

QUESTION 5

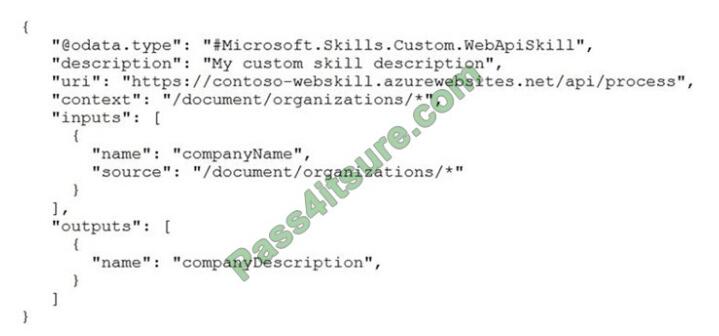

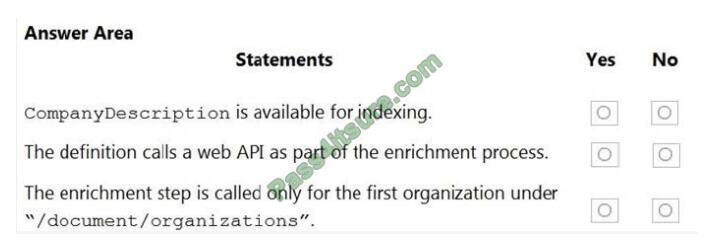

HOTSPOT

You are building an Azure Cognitive Search custom skill.

You have the following custom skill schema definition.

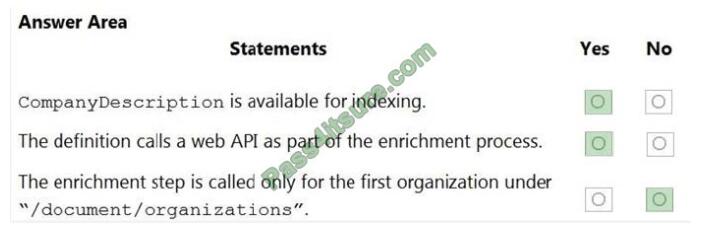

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: Yes

Once you have defined a skillset, you must map the output fields of any skill that directly contributes values to a given field in your search index.

Box 2: Yes

The definition is a custom skill that calls a web API as part of the enrichment process.

Box 3: No

For each organization identified by entity recognition, this skill calls a web API to find the description of that organization.

Reference:

https://docs.microsoft.com/en-us/azure/search/cognitive-search-output-field-mapping

QUESTION 6

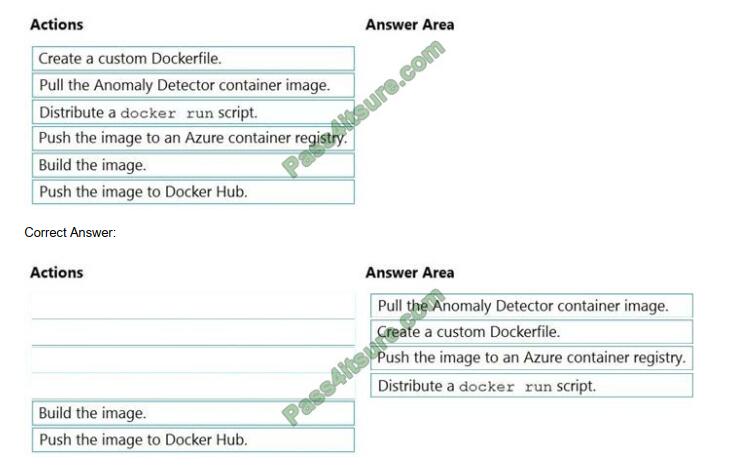

DRAG DROP

You plan to use containerized versions of the Anomaly Detector API on local devices for testing and in on-premises datacenters.

You need to ensure that the containerized deployments meet the following requirements:

Prevent billing and API information from being stored in the command-line histories of the devices that run the container.

Control access to the container images by using Azure role-based access control (Azure RBAC).

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. (Choose four.)

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Select and Place:

Step 1: Pull the Anomaly Detector container image.

Step 2: Create a custom Dockerfile.

Step 3: Push the image to an Azure container registry.

To push an image to an Azure container registry, you must first have an image.

Step 4: Distribute the docker run script.

Use the docker run command to run the containers.
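One way to picture why the custom Dockerfile keeps billing details out of the command-line history is the Python sketch below, which only renders text and runs nothing. The image path, the registry and repository names, and the Eula/Billing/ApiKey setting names are assumptions to be checked against the container documentation.

```python
# Assumed image path and setting names; verify against the container docs.
BASE_IMAGE = (
    "mcr.microsoft.com/azure-cognitive-services/decision/anomaly-detector:latest"
)


def render_dockerfile(billing_endpoint: str, api_key: str) -> str:
    """Render a Dockerfile that carries the billing settings inside the image."""
    lines = [
        f"FROM {BASE_IMAGE}",
        "ENV Eula=accept",
        f"ENV Billing={billing_endpoint}",
        f"ENV ApiKey={api_key}",
    ]
    return "\n".join(lines) + "\n"


def render_run_script(registry: str, repository: str) -> str:
    """Render the docker run script; it holds no key or endpoint."""
    return f"docker run --rm -p 5000:5000 {registry}/{repository}\n"


# Placeholder values only; a real endpoint and key would come from the resource.
dockerfile = render_dockerfile(
    "https://example.cognitiveservices.azure.com/", "PLACEHOLDER_KEY"
)
run_script = render_run_script("myregistry.azurecr.io", "anomaly-detector-custom")
print(dockerfile)
print(run_script)
```

Because the custom image lives in an Azure container registry, pulling it is governed by Azure RBAC, and the distributed script never mentions the key.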

Reference:

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-intro

QUESTION 7

You have a Video Indexer service that is used to provide a search interface over company videos on your company’s website.

You need to be able to search for videos based on who is present in the video.

What should you do?

A. Create a person model and associate the model to the videos.

B. Create person objects and provide face images for each object.

C. Invite the entire staff of the company to Video Indexer.

D. Edit the faces in the videos.

E. Upload names to a language model.

Correct Answer: A

Video Indexer supports multiple Person models per account. Once a model is created, you can use it by providing the model ID of a specific Person model when uploading/indexing or reindexing a video. Training a new face for a video updates the specific custom model that the video was associated with.

Note: Video Indexer supports face detection and celebrity recognition for video content. The celebrity recognition feature covers about one million faces based on commonly requested data sources such as IMDB, Wikipedia, and top LinkedIn influencers. Faces that aren’t recognized by the celebrity recognition feature are detected but left unnamed. Once you label a face with a name, the face and name get added to your account’s Person model. Video Indexer will then recognize this face in your future videos and past videos.

Reference: https://docs.microsoft.com/en-us/azure/media-services/video-indexer/customize-person-model-with-api

QUESTION 8

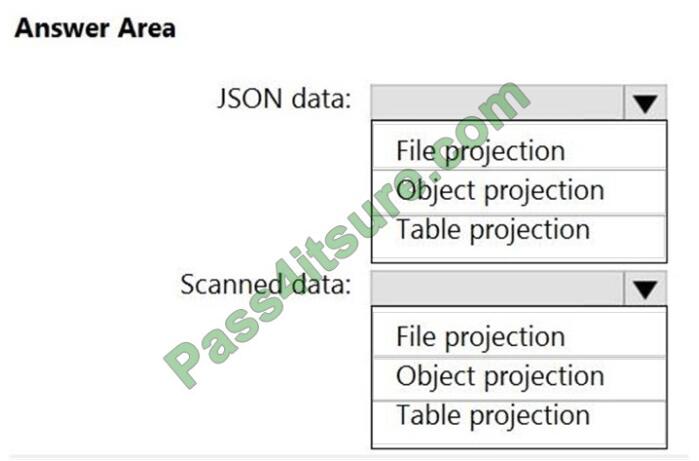

HOTSPOT

You are creating an enrichment pipeline that will use Azure Cognitive Search. The knowledge store contains unstructured JSON data and scanned PDF documents that contain text.

Which projection type should you use for each data type? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

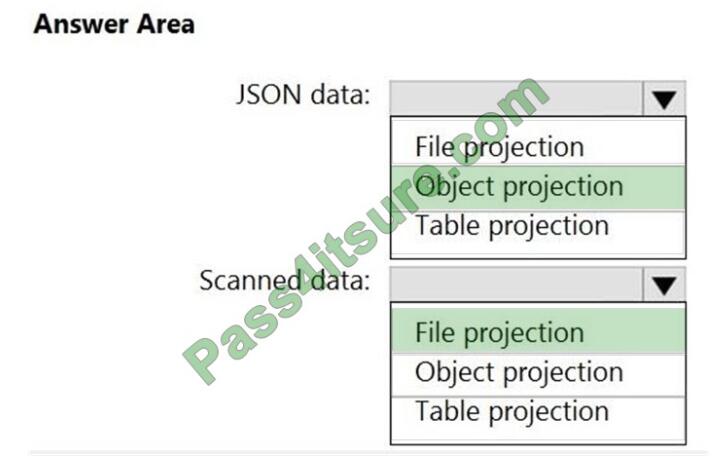

Correct Answer:

Box 1: Object projection

Object projections are JSON representations of the enrichment tree that can be sourced from any node.

Box 2: File projection

File projections are similar to object projections and only act on the normalized_images collection.
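As a rough illustration of the two projection types, the Python sketch below builds a knowledge store definition with one object projection and one file projection. The container names, the connection string, and the `/document` source path are hypothetical; the `objects` / `files` layout follows the projection format in the referenced overview.

```python
import json


def build_knowledge_store(connection_string: str) -> dict:
    """Build a knowledgeStore definition with object and file projections."""
    projections = [
        {
            "tables": [],
            # Object projection: JSON output sourced from an enrichment node.
            "objects": [
                {"storageContainer": "json-documents", "source": "/document"}
            ],
            # File projection: acts on the normalized_images collection only.
            "files": [
                {
                    "storageContainer": "scanned-images",
                    "source": "/document/normalized_images/*",
                }
            ],
        }
    ]
    return {
        "storageConnectionString": connection_string,
        "projections": projections,
    }


knowledge_store = build_knowledge_store("PLACEHOLDER_CONNECTION_STRING")
print(json.dumps(knowledge_store, indent=2))
```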

Reference:

https://docs.microsoft.com/en-us/azure/search/knowledge-store-projection-overview

QUESTION 9

HOTSPOT

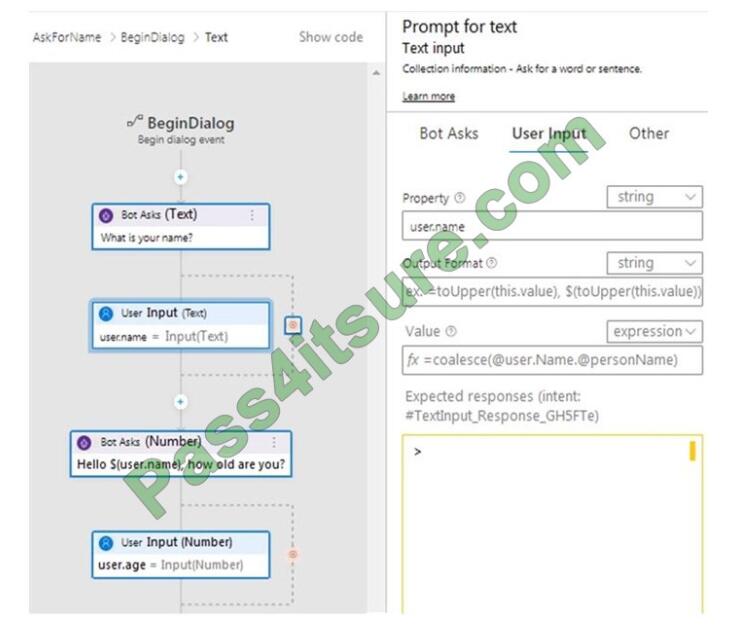

You are building a chatbot by using the Microsoft Bot Framework Composer.

You have the dialog design shown in the following exhibit.

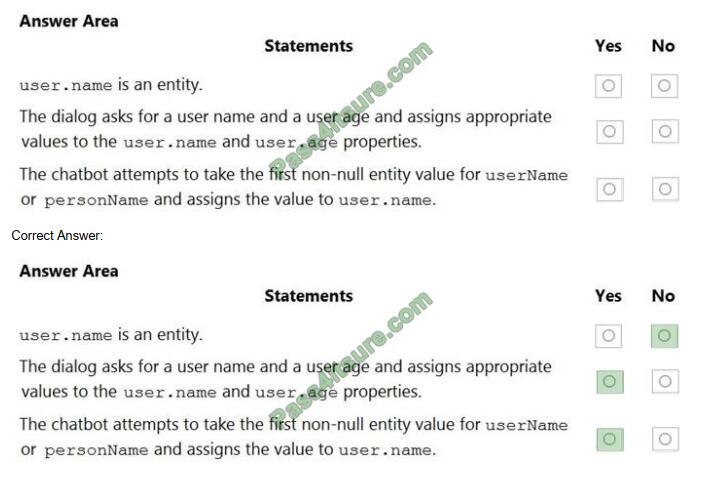

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Box 1: No

User.name is a property.

Box 2: Yes

Box 3: Yes

The coalesce() function evaluates a list of expressions and returns the first non-null (or nonempty for string) expression.
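The behavior described for coalesce() can be mimicked in a few lines of Python. This is a stand-in written from the sentence above, not the Composer or Kusto implementation, and the sample values are made up.

```python
from typing import Any, Optional


def coalesce(*values: Any) -> Optional[Any]:
    """Return the first value that is not None and not an empty string."""
    for value in values:
        if value is None:
            continue
        if isinstance(value, str) and value == "":
            continue
        return value
    return None


# With no stored name, the fallback greeting target is used.
print(coalesce(None, "", "friend"))  # prints: friend
```

Values such as 0 or False are returned as-is, since only None and empty strings are skipped.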

Reference:

https://docs.microsoft.com/en-us/composer/concept-language-generation

https://docs.microsoft.com/en-us/azure/data-explorer/kusto/query/coalescefunction

QUESTION 10

You are building a Language Understanding model for an e-commerce platform.

You need to construct an entity to capture billing addresses.

Which entity type should you use for the billing address?

A. machine learned

B. Regex

C. geographyV2

D. Pattern.any

E. list

Correct Answer: B

A regular expression entity extracts an entity based on a regular expression pattern you provide. It ignores case and ignores cultural variant. Regular expression is best for structured text or a predefined sequence of alphanumeric values that are expected in a certain format. For example:

Incorrect Answers:

C: The prebuilt geographyV2 entity detects places. Because this entity is already trained, you do not need to add example utterances containing GeographyV2 to the application intents. GeographyV2 entity is supported in English culture. The geographical locations have subtypes:

D: Pattern.any is a variable-length placeholder used only in a pattern’s template utterance to mark where the entity begins and ends.

E: A list entity represents a fixed, closed set of related words along with their synonyms. You can use list entities to recognize multiple synonyms or variations and extract a normalized output for them. Use the recommend option to see suggestions for new words based on the current list.

Reference: https://docs.microsoft.com/en-us/azure/cognitive-services/luis/luis-concept-entity-types
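To make the regular expression entity from answer B more concrete, here is a small Python sketch of case-insensitive extraction of a fixed-format alphanumeric value. The pattern and the utterance are hypothetical and use Python's re module rather than Language Understanding itself.

```python
import re

# Hypothetical pattern: "kb" followed by exactly six digits, matched
# case-insensitively, mirroring how a regex entity ignores case.
REFERENCE_PATTERN = re.compile(r"\bkb[0-9]{6}\b", re.IGNORECASE)


def extract_references(utterance: str) -> list:
    """Return every substring of the utterance that matches the pattern."""
    return REFERENCE_PATTERN.findall(utterance)


matches = extract_references("Compare KB123456 with kb654321 please")
print(matches)  # prints: ['KB123456', 'kb654321']
```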

QUESTION 11

You need to build a chatbot that meets the following requirements:

Supports chit-chat, knowledge base, and multilingual models

Performs sentiment analysis on user messages

Selects the best language model automatically

What should you integrate into the chatbot?

A. QnA Maker, Language Understanding, and Dispatch

B. Translator, Speech, and Dispatch

C. Language Understanding, Text Analytics, and QnA Maker

D. Text Analytics, Translator, and Dispatch

Correct Answer: C

Language Understanding: An AI service that allows users to interact with your applications, bots, and IoT devices by using natural language.

QnA Maker is a cloud-based Natural Language Processing (NLP) service that allows you to create a natural conversational layer over your data. It is used to find the most appropriate answer for any input from your custom knowledge base (KB) of information.

Text Analytics: Mine insights in unstructured text using natural language processing (NLP); no machine learning expertise is required. Gain a deeper understanding of customer opinions with sentiment analysis. The Language Detection feature of the Azure Text Analytics REST API evaluates text input.

Incorrect Answers:

A, B, D: Dispatch uses sample utterances for each of your bot’s different tasks (LUIS, QnA Maker, or custom), and builds a model that can be used to properly route your user’s request to the right task, even across multiple bots.

Reference:

https://azure.microsoft.com/en-us/services/cognitive-services/text-analytics/

https://docs.microsoft.com/en-us/azure/cognitive-services/qnamaker/overview/overview

QUESTION 12

You build a language model by using a Language Understanding service. The language model is used to search for information on a contact list by using an intent named FindContact.

A conversational expert provides you with the following list of phrases to use for training.

Find contacts in London.

Who do I know in Seattle?

Search for contacts in Ukraine.

You need to implement the phrase list in Language Understanding.

Solution: You create a new intent for location.

Does this meet the goal?

A. Yes

B. No

Correct Answer: A

An intent represents a task or action the user wants to perform. It is a purpose or goal expressed in a user’s utterance.

Define a set of intents that corresponds to actions users want to take in your application.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/luis/luis-concept-intent

QUESTION 13

You have a chatbot that was built by using the Microsoft Bot Framework.

You need to debug the chatbot endpoint remotely.

Which two tools should you install on a local computer? Each correct answer presents part of the solution. (Choose two.)

NOTE: Each correct selection is worth one point.

A. Fiddler

B. Bot Framework Composer

C. Bot Framework Emulator

D. Bot Framework CLI

E. ngrok

F. nginx

Correct Answer: CE

Bot Framework Emulator is a desktop application that allows bot developers to test and debug bots, either locally or remotely.

ngrok is a cross-platform application that “allows you to expose a web server running on your local machine to the internet.” Essentially, what we’ll be doing is using ngrok to forward messages from external channels on the web directly to our local machine to allow debugging, as opposed to the standard messaging endpoint configured in the Azure portal.

Reference: https://docs.microsoft.com/en-us/azure/bot-service/bot-service-debug-emulator

PS.

Thanks for reading! We hope the newest AI-102 exam dumps help you in your exam. To get the full set of AI-102 exam questions, try the Pass4itSure AI-102 dumps! AI-102 dumps in VCE and PDF are here: https://www.pass4itsure.com/ai-102.html (Updated: Jun 26, 2021).
